Guidelines on the Production and Preservation of Digital Audio Objects (web edition)


This is the English language web edition of IASA-TC 04 (Second Edition, 2009), an accepted authority on digital audio preservation in the sound archiving field. It is available in paper and PDF copies and is also available online in other languages.


Recommended citation style:

IASA Technical Committee, Guidelines on the Production and Preservation of Digital Audio Objects, ed. by Kevin Bradley. Second edition 2009. (= Standards, Recommended Practices and Strategies, IASA-TC 04). www.iasa-web.org/tc04/audio-preservation


Publication information

IASA Technical Committee

Standards, Recommended Practices and Strategies

Guidelines on the Production and Preservation of Digital Audio Objects

IASA-TC04, Second Edition

Edited by Kevin Bradley

Contributing authors

Kevin Bradley, National Library of Australia, President IASA and Vice Chair IASA TC; Mike Casey, Indiana University; Stefano S. Cavaglieri, Fonoteca Nazionale Svizzera; Chris Clark, British Library (BL); Matthew Davies, National Film and Sound Archive (NFSA); Jouni Frilander, Finnish Broadcasting Company; Lars Gaustad, National Library of Norway and Chair IASA TC; Ian Gilmour, NFSA; Albrecht Hefner, Sudwestrundfunk, Germany; Franz Lechleitner, Phonogrammarchiv of the Austrian Academy of Sciences (OAW); Guy Marechal, PROSIP; Michel Merten, Memnon; Greg Moss, NFSA; Will Prentice, BL; Dietrich Schuller, OAW; Lloyd Stickells and Nadia Wallaszkovits, OAW.

Reviewed by the IASA Technical Committee which included at the time (in addition to those above)

Tommy Sjoberg, Folkmusikens Hus, Sweden; Bruce Gordon, Harvard University; Bronwyn Officer, National Library of New Zealand; Stig L. Molneryd, The National Archive of Recorded Sound and Moving Images, Sweden; George Boston; Drago Kunej, Slovenian Academy of Sciences and Arts; Nigel Bewley, BL; Jean-Marc Fontaine, Laboratoire d’Acoustique Musicale; Chris Lacinak; Gilles St. Laurent, Library and Archives, Canada; and Xavier Sene, Bibliotheque Nationale de France.

Published by the International Association of Sound and Audio Visual Archives

c/o Secretary-General:

Ilse Assmann

Media Libraries

South African Broadcasting Corporation

PO Box 931, 2006 Auckland Park

South Africa

1st Edition Published 2004

2nd Edition Published 2009

IASA-TC04 Guidelines in the Production and Preservation of Digital Audio Objects: standards, recommended practices, and strategies: 2nd edition/ edited by Kevin Bradley

This publication provides guidance to audiovisual archivists on a professional approach to the production and preservation of digital audio objects

Includes bibliographical references and index

ISBN 978-91-976192-2-6

Copyright: International Association of Sound and Audio Visual Archives (IASA) 2009

Translation is not permitted without the consent of the IASA Executive Board and may only be undertaken in accordance with the Guidelines & Policy Statement, Translation of Publications Guidelines, Guidelines for the Translation of IASA Publications & Workflow for Translations

www.iasa-web.org/guidelines-translating-iasa-publications

This publication is approved by the Sub-Committee on Technology of the Memory of the World Programme of UNESCO


Preface to the Second Edition

The process of debating the principles which underpin the work of sound preservation, and then discussing, codifying and documenting the practices that we as professional sound archivists use and recommend, necessarily identifies the strengths and weaknesses in our everyday work. When the first edition of TC 04 Guidelines on the Production and Preservation of Digital Audio Objects was completed and printed in 2004 there was, in spite of our pride in that publication, little doubt amongst the IASA Technical Committee that a second edition would be necessary to address those areas that we knew we would need to be working on. In the intervening four years we as a committee have grown, extending our knowledge and expertise in many areas, and helped to develop the standards and systems which implement sustainable work and preservation practices. This second edition is the beneficiary of that work, and it contains much that is vital in the evolving field of sustainable sound preservation by digital means.

Though we have incorporated much new information, and refined some of the fundamental chapters, the advice provided in the second edition does not stand in opposition to that provided in the first. The IASA-TC 04 Guidelines on the Production and Preservation of Digital Audio Objects is informed by IASA-TC 03 The Safeguarding of the Audio Heritage: Ethics, Principles and Preservation Strategy. A revised version of TC 03 was published in 2006 which took account of new developments in digital audio archiving, and of the much more practical role of TC 04. TC 03 2006 concentrates on the principles and supersedes the earlier versions, and these guidelines are the practical embodiment of the principles.

The major amendments in this second edition of TC 04 can be found in the chapters dealing with the digital repositories and architectures. Chapter 3, Metadata, has been extensively enlarged and provides significant and detailed advice on approaches to the management of data and metadata for the purposes of preservation, reformatting, analysis, discovery and use. The chapter ranges widely across the subject area from schemas through to structures to manage and exchange the content and considers the major building blocks of data dictionaries, schemas, ontologies, and encodings. The sibling to this is Chapter 4, Unique and Persistent identifiers, and it provides guidance on naming and numbering of files and digital works.

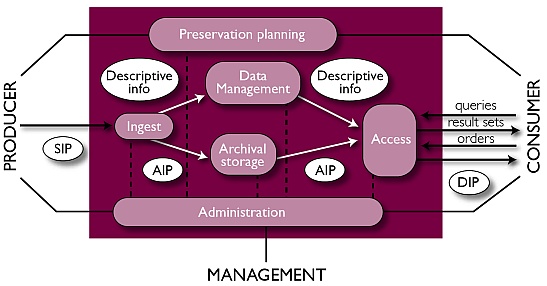

The new section included as Chapter 6, Preservation Target Formats and Systems, is structured around the functional categories identified in the Reference Model for an Open Archival Information System (OAIS): Ingest, Archival Storage, Preservation Planning, Administration and Data Management, and Access, and each of the subsequent sections deals with one of these subject areas. The use of this conceptual model in organising the book has two important consequences. Firstly, it uses the same functional categories as the architectural design of the major repository and data management systems, which means it has real world relevance. Secondly, identifying separate and abstracted components of a digital preservation strategy allows the archivist to make decisions about various parts of the process, rather than trying to solve and implement the monolithic whole. Chapter 9, Partnerships, Project Planning and Resources, is an entirely new chapter, and provides advice on the issues to consider if a collection manager decides to outsource all or part of the processes involved in the preservation of the audio collections.

Chapter 7, Small Scale Approaches to Digital Storage Systems, considers how to build a low cost digital management system which, while limited in scope, still adheres to the principles and quality measures identified in chapter 6.

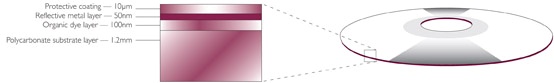

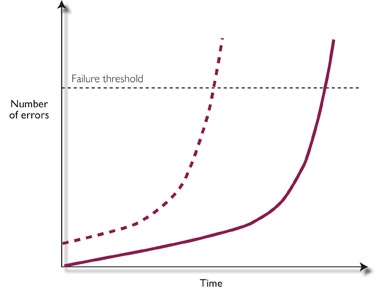

Chapter 8 revisits the risks associated with optical disc storage and makes recommendations as to their management, while suggesting the advice in chapters 6 and 7 may be more useful in the long term management of digital content.

Chapter 5, Signal Extraction from Original Carriers, was one of the most practical and informed components of the first edition, and it remains a source of practical knowledge, and information on standards and advice. As part of the review process the chapters on signal extraction have been refined, and extra useful advice has been added. An extra section, 5.7 Field Recording Technologies and Archival Approaches, has been added, and it addresses the question of how to create a sound recording in the field for which the content is intended for long term archival storage.

Chapter 2, Key Digital Principles, adheres to the same standards expressed in the earlier edition. There is, however, more explanatory detail, and technical information, particularly regarding the digital conversion processes, has been provided in more precise and standard language.

TC 04 represents a considerable effort and commitment from the IASA Technical Committee, not only those who created the original text, but also those who reviewed and analysed the chapters until we reached a satisfactory explanation. To my friends and colleagues in the TC goes my respect for the detailed knowledge and gratitude for their generosity in sharing it. The quality of this new edition is a testament to their expertise.

Kevin Bradley

Editor

November 2008

Introduction to the First Edition

Digital audio has, over the past few years, reached a level of development that makes it both effective and affordable for use in the preservation of audio collections of every magnitude. The integration of audio into data systems, the development of appropriate standards, and the wide acceptance of digital audio delivery mechanisms have replaced all other media to such an extent that there is little choice for sound preservation except digital storage approaches. Digital technology offers the potential to provide an approach that addresses many of the concerns of the archiving community through lossless cloning of audio data through time. However, the processes of converting analogue audio to digital, transferring to storage systems, of managing and maintaining the audio data, providing access and ensuring the integrity of the stored information, present a new range of risks that must be managed to ensure that the benefits of digital preservation and archiving are realised. Failure to manage these risks appropriately may result in significant loss of data, value and even audio content.

This publication of the Technical Committee of the International Association of Sound and Audiovisual Archives (IASA) "Guidelines on the Production and Preservation of Digital Audio Objects" is intended to provide guidance to audiovisual archivists on a professional approach to the production and preservation of digital audio objects. It is the practical outcome of the previous IASA Technical Committee paper, IASA-TC 03 "The Safeguarding of the Audio Heritage: Ethics, Principles and Preservation Strategy, Version 2, September 2001". The Guidelines address the production of digital copies from analogue originals for the purposes of preservation, the transfer of digital originals to storage systems, as well as the recording of original material in digital form intended for long-term archival storage. Any process of digitisation is selective: the audio content itself supplies more information to potential users than is contained in the intended signal, and the standards of analogue to digital conversion fix the limits of the resolution of the audio permanently and, unless carefully undertaken, partially.

There are three main parts to the content of the Guidelines:

- Standards, Principles and Metadata

- Signal Extraction from Originals

- Target Formats

Standards, Principles and Metadata: Of the four basic tasks that are performed by all archives - acquisition, documentation, access, preservation, the primary task is to preserve the information placed in the care of the collection (IASA-TC 03, 2001). However, if the tasks of acquisition and documentation are undertaken in combination with a well planned digital preservation strategy that adheres to adequate standards, the task of providing access is facilitated by the process. Long term access is a product of appropriate preservation.

Adherence to widely used and accepted standards suitable for the preservation of digital audio is a fundamental necessity of audio preservation. The IASA Guidelines recommend linear PCM (pulse code modulation) (interleaved for stereo) in a .wav or preferably BWF .wav file (EBU Tech 3285) for all two track audio. The use of any perceptual coding (“lossy compression”) is strongly discouraged. It is recommended that all audio be digitised at 48 kHz or higher, and with a bit depth of at least 24 bit. Analogue to Digital (A/D) conversion is a precision process, and low cost converters integrated into computer sound cards cannot meet the demands of archival preservation programs.

Once encoded as a data file, the preservation of the audio faces many of the same issues as those of all digital data, and foremost in managing these is the assigning of a unique Persistent Identifier (PI) and providing appropriate metadata. Metadata is not just the descriptive information that allows the user or archive to identify the content, but also includes the technical information that enables the recognition and replaying of the audio, and the preservation metadata that retains information about the processes that went to generate the audio file. It is only thus that the integrity of the audio content can be guaranteed. The digital archive will depend on comprehensive metadata to maintain its collection. A well planned digital archive will automate the production of much of the metadata and should include the original carrier, its format and state of preservation, replay equipment and parameters, the digital resolution, format, all equipment used, the operators involved in the process and any processes or procedures undertaken.

Signal Extraction from Originals: “Sound archives have to ensure that, in the replay process, the recorded signals can be retrieved to the same, or a better, fidelity standard as was possible when they were recorded... (also) carriers are the bearers of information: desired or primary information, consisting of the intended sonic content, and ancillary or secondary information which may take manifold forms. Both primary and secondary information form part of the Audio Heritage.” (IASA-TC 03, 2001).

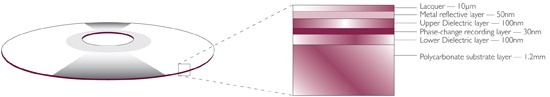

To take full advantage of the potential offered by digital audio it is necessary to adhere to the above principles and ensure that the replay of the audio original is made with a full awareness of all the possible issues. This requires knowledge of the historic audio technologies and a technical awareness of the advances in replay technology. Where appropriate, the Guidelines provide advice on the replay of historical mechanical and other obsolete formats, including cylinders and coarse groove discs, steel wire and office dictation systems, vinyl LP records, analogue magnetic tape, cassette and reel, magnetic digital carriers such as DAT and its video tape based predecessors, and optical disk media such as CD and DVD. For each of the formats there is advice on selection of best copy, cleaning, carrier restoration, replay equipment, speed and replay equalisation, corrections for errors caused by misaligned recording equipment, removal of storage related signal artefacts, and the time required to undertake a transfer to digital. All of these are important topics which are addressed in the Guidelines with a consideration of the associated ethical issues, though the last is particularly significant as many digitisation plans fail to budget for the considerable time demands of an audio transfer process.

All the parameters mentioned above must be determined objectively, and appropriate records kept of every process. Digital storage and associated technologies and standards enable an ethical approach to sound archiving by enabling the production of documentation and its storage in linked, related metadata fields.

Target Formats: Data can be stored in many ways and on many carriers and the appropriate type of technology will be dependent on the circumstances of the institution and its collection. The Guidelines provide advice and information on various suitable approaches and technologies including Digital Mass Storage Systems (DMSS) and small scale manual approaches to digital storage systems, data tape, hard disks, optical disks including CD and DVD recordable and magneto optical disks (MO).

No target format is a permanent solution to the issue of digital audio preservation, and no technological development will ever provide the ultimate solution; rather, each is a step in a process whereby institutions will be responsible for maintaining data through technological changes and developments, migrating data from the current system to the next for as long as the data remains valuable. The DMSS with suitable management software are the most appropriate for the long term maintenance of audio data. “Such systems permit automatic checking of data integrity, refreshment, and, finally, migration with a minimum use of human resources” (IASA-TC 03, 2001). These systems can be scaled to suit smaller archives, though this will most often result in an increased responsibility for manual data checking. Discrete storage formats such as CD and DVD recordable, and magneto optical disks (MO) are inherently less reliable. The Guidelines suggest standards and approaches to maintaining the data on these carriers, while recommending that the more reliable solutions found in integrated storage systems are to be substantially preferred.
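The automatic checking of data integrity mentioned above is commonly built on stored checksums that are recomputed and compared at intervals. The following is a minimal sketch only: the choice of SHA-256 and the helper names `file_digest_hex` and `verify_fixity` are assumptions made for illustration, and the Guidelines do not prescribe a particular algorithm at this point.

```python
import hashlib


def file_digest_hex(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read fixed-size blocks so large audio files never sit wholly in memory.
        for block in iter(lambda: handle.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()


def verify_fixity(path, expected_hex):
    """Return True if the file's current digest matches the recorded one."""
    return file_digest_hex(path) == expected_hex.lower()
```

In such a scheme the digest would be recorded when the file is ingested and checked again after every copy, refreshment or migration.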

1: Background

1.1 Audiovisual archives hold a responsibility for the preservation of cultural heritage covering all spheres of musical, artistic, sacred, scientific, linguistic and communications activity, reflecting public and private life, and the natural environment, held as published and un-published recorded sound and image.

1.2 The aim of preservation is to provide our successors and their clients with as much of the information contained in our holdings as it is possible to achieve in our professional working environment. It is the responsibility of an archive to assess the needs of its users, both current and future, and to balance those needs against the conditions and resources of the archive. The ultimate purpose of preservation is to ensure that access to the audio content of the collection is available to approved users, current and future, without undue threat or damage to the audio item.

1.3 As the lifespan of all audio carriers is limited by their physical and chemical stability, as well as the availability of the reproduction technology and, as the reproduction technology itself may be a potential source of damage for many audio carriers, audio preservation has always required the production of copies that can stand for the original as preservation duplicates, which in the parlance of digital archiving have come to be known as “preservation surrogates”. The need to migrate content to another storage system applies to carriers of digital audio originals perhaps even more so as they may be endangered by the ever shorter lifetimes of highly sophisticated hardware and related software in the market, which, sometimes only a few years after their market introduction, will lead to the total obsolescence of replay equipment. However, the same constraints that apply to the original item will wholly, or in part, apply to the preservation target format, requiring continued reduplication. If preservation had continued by serial duplication in the analogue domain this would have produced a degradation of the audio signal with each subsequent generation.

1.4 The potential offered by the production of digital surrogates for the purpose of preservation seems to provide an answer to linked issues of preservation and access. However, the decisions made about digital formats, resolutions, carriers and technology systems will impose limits on the effectiveness of digital preservation that cannot be reversed, as will the quality of audio being encoded. Optimal signal extraction from original carriers is the indispensable starting point of each digitisation process. As recording media very often require very specific replay technology, timely organisation of copying into the digital domain must take place, before obsolescence of hardware becomes critical.

1.5 The ability to recopy the captured digital copy without further loss or degradation has often led enthusiastic archivists to describe it as “eternal preservation”. The easy production of low bit-rate distribution copies broadens the ability of archives to provide access to their collections without endangering the original item. However, far from being eternal, poorly managed digital archiving practices may lead to a reduction in the effective lifespan and integrity of audio content, whereas a well managed digital conversion and preservation strategy will facilitate the realisation of the benefits promised by digital technology. Similarly, a poorly planned system requiring manual intervention may present a management task of considerable dimension that could be beyond the capabilities of the collection managers and curators and so endanger the collection. A well planned system should enable automation of the processes and so preservation can proceed in a timely manner. No system for preserving sound will provide a one-off solution; any preservation solution will require future transfers and migrations that must be planned for when the material is first digitised and stored.

1.6 The Guidelines address audio carriers such as cylinders and coarse groove discs, steel wire and office dictation systems, vinyl LP records, analogue magnetic tape, cassette and reel, magnetic digital carriers such as DAT and its video tape based predecessors, and optical disk media such as CD and DVD. Though many of the principles contained herein will be applicable, sound for film is not specifically addressed. This document does not consider piano rolls, MIDI files or other systems which are player directions rather than encoded audio. The following principles outline the areas in which critical decisions must be made in the transfer to and management of digital audio materials.

2: Key Digital Principles

2.1 Standards: It is integral to the preservation of audio that the formats, resolutions, carrier and technology systems selected adhere to internationally agreed standards appropriate to the intended archival purposes. Non-standard formats, resolutions and versions may not in the future be included in the preservation pathways that will enable long term access and future format migration.

2.2 Sampling Rate: The sampling rate fixes the maximum limit on frequency response. When producing digital copies of analogue material IASA recommends a minimum sampling rate of 48 kHz for any material. However, higher sampling rates are readily available and may be advantageous for many content types. Although the higher sampling rates encode audio outside of the human hearing range, the net effect of higher sampling rate and conversion technology improves the audio quality within the ideal range of human hearing. The unintended and undesirable artefacts in a recording are also part of the sound document, whether they were inherent in the manufacture of the recording or have been subsequently added to the original signal by wear, mishandling or poor storage. Both must be preserved with utmost accuracy. For certain signals and some types of noise, sampling rates in excess of 48 kHz may be advantageous. IASA recommends 96 kHz as a higher sampling rate, though this is intended only as a guide, not an upper limit; however, for most general audio materials the sampling rates described should be adequate. For audio digital-original items, the sampling rate of the storage technology should equal that of the original item.
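The limit on frequency response follows from the sampling theorem: a sampled signal can represent frequencies only up to half the sampling rate (the Nyquist frequency). A small sketch, in which the function name `nyquist_limit_hz` is a hypothetical label, shows the theoretical upper limit for some common rates; practical converters reach somewhat less because of their anti-aliasing filters.

```python
def nyquist_limit_hz(sampling_rate_hz):
    """Highest frequency representable at the given sampling rate."""
    return sampling_rate_hz / 2


# Print the theoretical limit for the CD rate and the rates named in 2.2 and 2.4.1.3.
for rate in (44_100, 48_000, 96_000, 192_000):
    print(f"{rate} Hz sampling: content up to {nyquist_limit_hz(rate):.0f} Hz")
```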

2.3 Bit Depth: The bit depth fixes the dynamic range of an encoded audio event or item. 24 bit audio theoretically encodes a dynamic range that approaches physical limits of listening, though in reality the technical limits of the system are slightly lower. 16 bit audio, the CD standard, may be inadequate to capture the dynamic range of many types of material, especially where high level transients are encoded such as the transfer of damaged discs. IASA recommends an encoding rate of at least 24 bit to capture all analogue materials. For audio digital-original items, the bit depth of the storage technology should at least equal that of the original item. It is important that care is taken in recording to ensure that the transfer process takes advantage of the full dynamic range.
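The relationship between bit depth and dynamic range can be illustrated with the standard textbook figure for an ideal quantiser driven by a full-scale sine wave, roughly 6.02 dB per bit plus 1.76 dB. The sketch below assumes that idealised model; the function name is hypothetical, and real converters fall short of these values.

```python
import math


def theoretical_dynamic_range_db(bits):
    """Signal to quantisation noise ratio of an ideal N-bit quantiser
    for a full-scale sine wave, in dB (about 6.02 * N + 1.76)."""
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)


# Compare the CD word length with the recommended minimum.
for bits in (16, 24):
    print(f"{bits} bit: about {theoretical_dynamic_range_db(bits):.1f} dB")
```

Under this model 16 bit gives about 98 dB and 24 bit about 146 dB.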

2.4 Analogue to Digital Converters (A/D)

2.4.1 In converting analogue audio to a digital data stream, the A/D should not colour the audio or add any extra noise. It is the most critical component in the digital preservation pathway. In practice, the A/D converter incorporated in a computer’s sound card cannot meet the specifications required due to low cost circuitry and the inherent electrical noise in a computer. IASA recommends the use of discrete (stand alone) A/D converters connected via an AES/EBU or S/PDIF interface, IEEE1394 bus-connected (firewire) discrete A/D converters or USB serial interface-connected discrete A/D converters that will convert audio from analogue to digital in accordance with the following specification. All specifications are measured at the digital output of the A/D converter, and are in accordance with Audio Engineering Society standard AES 17-1998 (r2004), IEC 61606-3, and associated standards as identified.

2.4.1.1 Total Harmonic Distortion + Noise (THD+N)

With signal 997 Hz at -1 dB FS, the A/D converter THD+N will be less than -105 dB unweighted, -107 dB A-weighted, 20 Hz to 20 kHz bandwidth limited.

With signal 997 Hz at -20 dB FS, the A/D converter THD+N will be less than -95 dB unweighted, -97 dB A-weighted, 20 Hz to 20 kHz bandwidth limited.

2.4.1.2 Dynamic Range (Signal to Noise)

The A/D converter will have a dynamic range of not less than 115 dB unweighted, 117 dB A-weighted. (Measured as THD+N relative to 0 dB FS, bandwidth limited 20 Hz to 20 kHz, stimulus signal 997 Hz at -60 dB FS).

2.4.1.3 Frequency Response

For an A/D sampling frequency of 48 kHz, the measured frequency response will be better than ± 0.1 dB for the range 20 Hz to 20 kHz.

For an A/D sampling frequency of 96 kHz, the measured frequency response will be better than ± 0.1 dB for the range 20 Hz to 20 kHz, and ± 0.3 dB for the range 20 kHz to 40 kHz.

For an A/D sampling frequency of 192 kHz, the frequency response will be better than ± 0.1 dB for the range 20 Hz to 20 kHz, and ± 0.3 dB from 20 kHz to 50 kHz (reference audio signal = 997 Hz, amplitude -20 dB FS).

2.4.1.4 Intermodulation Distortion IMD (SMPTE/DIN/AES17)

The A/D converter IMD will not exceed -90 dB. (AES17/SMPTE/DIN twin-tone test sequences, combined tones equivalent to a single sine wave at full scale amplitude).

2.4.1.5 Amplitude Linearity

The A/D converter will exhibit amplitude gain linearity of ± 0.5 dB within the range -120 dB FS to 0 dB FS. (997 Hz sinusoidal stimuli).

2.4.1.6 Spurious Aharmonic Signals

Better than -130 dB FS with stimulus signal 997 Hz at -1 dB FS.

2.4.1.7 Internal Sample Clock Accuracy

For an A/D converter synchronised to its internal sample clock, frequency accuracy of the clock measured at the digital stream output will be better than ±25 ppm.

2.4.1.8 Jitter

Interface jitter measured at A/D output < 5 ns.

2.4.1.9 External Synchronisation

Where the A/D converter sample clock will be synchronised to an external reference signal, the A/D converter must react transparently to incoming sample rate variations ± 0.2% of the nominal sample rate. The external synchronisation circuit must reject incoming jitter so that the synchronised sample rate clock is free from artefacts and disturbances.
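When comparing bench measurements against the limits in 2.4.1, it can help to encode the numeric thresholds once and test each candidate converter against them. The sketch below is illustrative only: the class and field names are hypothetical, only the unweighted single-figure limits are covered, and the frequency response, amplitude linearity and external synchronisation requirements are left out because they need more than one number per measurement.

```python
from dataclasses import dataclass


@dataclass
class ConverterMeasurements:
    """Hypothetical container for unweighted bench measurements."""
    thdn_at_minus1_dbfs_db: float
    thdn_at_minus20_dbfs_db: float
    dynamic_range_db: float
    imd_db: float
    spurious_dbfs: float
    clock_error_ppm: float
    interface_jitter_ns: float


def failed_requirements(m):
    """Return the names of the section 2.4.1 limits that `m` does not meet."""
    checks = {
        "THD+N at -1 dB FS below -105 dB": m.thdn_at_minus1_dbfs_db < -105,
        "THD+N at -20 dB FS below -95 dB": m.thdn_at_minus20_dbfs_db < -95,
        "dynamic range at least 115 dB": m.dynamic_range_db >= 115,
        "IMD not above -90 dB": m.imd_db <= -90,
        "spurious signals below -130 dB FS": m.spurious_dbfs < -130,
        "clock accuracy within 25 ppm": abs(m.clock_error_ppm) < 25,
        "interface jitter below 5 ns": m.interface_jitter_ns < 5,
    }
    # Keep only the requirements whose check evaluated to False.
    return [name for name, passed in checks.items() if not passed]
```

An empty list means every encoded limit is met; it says nothing about the requirements that the sketch omits.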

2.4.2 IEEE1394 and USB Audio Interfaces. Many A/D converters now provide the facilities to directly interface to a host computer via the high speed IEEE1394 (firewire) and USB 2.0 serial interfaces. Both systems are successfully implemented as audio transmission interfaces across the major personal computer platforms, and can reduce the requirement to install a specialised, high-quality soundcard interface in the computer chassis. Audio quality is generally independent of the bus technology in use.

2.4.3 Selection of A/D Converters: The A/D converter is the most critical piece of technology in the digital preservation pathway. When choosing a converter, and before any further evaluation is undertaken, IASA recommends that all specifications are tested against the reference standards described above. Any converter which does not meet the basic IASA technical specifications will produce less than accurate conversions. In conjunction with technical evaluation, statistically valid blind listening tests should be carried out on short listed converters to determine overall suitability and performance. All the specifications and testing described above are stringent and complex, and these specifications are highly important in selecting and evaluating analogue to digital converters. The published specifications from the equipment manufacturers are sometimes challenging to compare, often incomplete and occasionally difficult to reconcile with the performance of the device they purport to represent. It may suit certain communities or groups to undertake group or panel testing to maximise resources. Certain institutions, such as state archives, libraries or academic science departments may be in a position to assist with testing.

2.5 Sound Cards: The sound card used in a computer for the purposes of audio preservation should have a reliable digital input with a high quality digital audio stream synchronisation mechanism, and pass a digital audio data stream without change or alteration. As a discrete (stand alone) A/D converter must be used, the primary purpose of a sound card in audio preservation is in passing a digital signal to the computer data bus, though it may also be used for returning the converted signal to analogue for monitoring purposes. Care should be taken in choosing a card that accepts the appropriate sampling and bit rates, and does not inject noise or other extraneous artefacts. IASA recommends the use of a high quality sound card that meets the following specification:

2.5.1 Sample rate support: 32 kHz to 192 kHz +/- 5%.

2.5.2 Digital audio quantisation: 16-24 bits.

2.5.3 Varispeed: automatic by incoming audio or wordclock.

2.5.4 Synchronisation: internal clock, wordclock, digital audio input.

2.5.5 Audio interface: high speed AES/EBU conforming to AES3 specifications.

2.5.6 Jitter acceptance and signal recovery on inputs up to 100 ns without error.

2.5.7 Digital audio subcode pass-through.

2.5.8 Optional timecode inputs.

2.6 Computer Based Systems and Processing Software: Recent generations of computers have sufficient power to manipulate large audio files. Once in the digital domain, the integrity of the audio files should be maintained. As noted above the critical points in the preservation process are converting the analogue audio to digital (which relies on the A/D converter), and entering the data into the system, either through the sound card or other data port. However, some systems truncate the word length of an item in order to process it, resulting in a lower effective bit rate and others may only process compressed file formats, such as MP3, neither of which is acceptable. IASA recommends that a professional audio computer based system be used whose processing word length exceeds that of the file (i.e. greater than 24 bit) and which does not alter the file format.
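The effect of word length truncation can be seen on a single sample value. This sketch assumes signed integer samples and simple truncation without dither; the function names are hypothetical. Dropping a 24 bit sample to 16 bits discards the eight least significant bits, and scaling back up does not recover them.

```python
def truncate_to_16_bit(sample_24):
    """Drop the 8 least significant bits of a signed 24-bit sample."""
    return sample_24 >> 8


def restore_to_24_bit(sample_16):
    """Scale a 16-bit sample back to the 24-bit range (lost bits stay lost)."""
    return sample_16 << 8


original = 0x123456
round_trip = restore_to_24_bit(truncate_to_16_bit(original))
# The difference is the low-level detail removed by truncation.
print(hex(original), hex(round_trip), original - round_trip)
```

Any sample between 0 and 255 collapses to zero in this round trip, which is why quiet detail is the first thing lost.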

2.7 Data Reduction: It has become generally accepted in audio archiving that when selecting a digital target format, formats employing data reduction (frequently mistakenly called data “compression”) based on perceptual coding (lossy codecs) must not be used. Transfers employing such data reduction mean that parts of the primary information are irretrievably lost. The results of such data reduction may sound identical or very similar to the unreduced (linear) signal, at least for the first generation, but the further use of the data reduced signal will be severely restricted and its archival integrity has been compromised.

2.8 File Formats

2.8.1 There are a number of linear audio file formats that may be used to encode audio, however, the wider the acceptance and use of the format in a professional audio environment, the greater the likelihood of long term acceptance of the format, and the greater the probability of professional tools being developed to migrate the format to future file formats when that becomes necessary. Because of the simplicity and ubiquity of linear Pulse Code Modulation (PCM) [interleaved for stereo] IASA recommends the use of WAVE, (file extension .wav) developed by Microsoft and IBM as an extension from the Resource Interchange File Format (RIFF). Wave files are widely used in the professional audio industry.

2.8.2 BWF .wav files [EBU Tech 3285] are an extension of .wav and are supported by most recent audio technology. The benefit of BWF for both archiving and production uses is that metadata can be incorporated into the headers which are part of the file. In most basic exchange and archiving scenarios this is advantageous; however, the fixed nature of the embedded information may become a liability in large and sophisticated data management systems (see the discussion in Chapter 3 Metadata and Chapter 7 Small Scale Approaches to Digital Storage Systems). This, and other limitations with BWF, can be managed by using only a minimal set of data within BWF and maintaining other data with external data management systems. AES31-2-2006, the AES standard on “Network and file transfer of audio - Audio-file transfer and exchange - File format for transferring digital audio data between systems of different type and manufacture” is largely compatible with the standard set in BWF, and it is expected that future development in the area will continue to make the format viable. The BWF format is widely accepted by the archiving community and, with the limitations described in mind, IASA recommends the use of BWF .wav files [EBU Tech 3285] for archival purposes.
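Because BWF adds its metadata as an additional chunk inside the RIFF structure, a file can be inspected by walking its top-level chunks. The following is a minimal sketch, assuming a well-formed RIFF/WAVE stream smaller than 4 GB; the function name is hypothetical, and the test file is plain WAVE generated with the Python standard library, so no `bext` chunk is expected in it.

```python
import io
import struct
import wave


def list_riff_chunks(stream):
    """Return (chunk_id, size) pairs for the top-level chunks of a RIFF/WAVE stream."""
    riff, _size, form = struct.unpack("<4sI4s", stream.read(12))
    if riff != b"RIFF" or form != b"WAVE":
        raise ValueError("not a RIFF/WAVE stream")
    chunks = []
    while True:
        header = stream.read(8)
        if len(header) < 8:
            break
        chunk_id, size = struct.unpack("<4sI", header)
        chunks.append((chunk_id.decode("ascii"), size))
        # Chunk data is padded to an even number of bytes.
        stream.seek(size + (size & 1), io.SEEK_CUR)
    return chunks


# Build a tiny in-memory WAVE file so the sketch is self-contained.
buffer = io.BytesIO()
with wave.open(buffer, "wb") as wav:
    wav.setnchannels(1)
    wav.setsampwidth(3)        # 24-bit samples
    wav.setframerate(48000)
    wav.writeframes(b"\x00" * 3 * 480)

buffer.seek(0)
chunks = list_riff_chunks(buffer)
has_bext = any(chunk_id == "bext" for chunk_id, _ in chunks)
print(chunks, "BWF bext chunk present:", has_bext)
```

A file produced by a BWF-aware tool would be expected to list a `bext` chunk alongside `fmt ` and `data`.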

2.8.3 Multitrack audio and film or video soundtracks, or large audio files, may use RF64 [EBU Tech 3306] (which is compatible with BWF), AES-31, or a .wav file in a Material Exchange Format (MXF) wrapper. As these are all still under development, one pragmatic approach may be to create multiple time coherent mono BWF files wrapped in the tar (tape archive) format.
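The pragmatic approach of wrapping several mono files in tar can be sketched with the Python standard library. This is an illustration under stated assumptions: the file names are invented, the tracks are silent plain WAVE files standing in for real BWF files, and equal length is used here as a simple proxy for time coherence.

```python
import io
import tarfile
import wave


def make_mono_wav(num_frames: int, sample_rate: int = 48000) -> bytes:
    """Return a silent 24-bit mono WAVE file as bytes (placeholder for a real BWF track)."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(3)
        wav.setframerate(sample_rate)
        wav.writeframes(b"\x00" * 3 * num_frames)
    return buffer.getvalue()


def wrap_tracks(tracks: dict) -> bytes:
    """Pack named track files into an uncompressed tar archive held in memory."""
    archive = io.BytesIO()
    with tarfile.open(fileobj=archive, mode="w") as tar:
        for name, data in sorted(tracks.items()):
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return archive.getvalue()


# Hypothetical file names; every track has the same length so they stay time coherent.
tracks = {f"session01_track{n:02d}.wav": make_mono_wav(4800) for n in (1, 2, 3, 4)}
tar_bytes = wrap_tracks(tracks)

with tarfile.open(fileobj=io.BytesIO(tar_bytes), mode="r") as tar:
    names = tar.getnames()
print(names)
```

The archive is written uncompressed (mode `"w"`), so the audio data inside it remains byte-for-byte identical to the individual files.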

2.9 Audio Path: The combination of reproduction equipment, signal cables, mixers and other audio processing equipment should have specifications that equal or exceed that of digital audio at the specified sampling rate and bit depth. The replay equipment, audio path, target format and standards must exceed that of the original carrier.

3: Metadata

3.1 Introduction

3.1.1 Metadata is structured data that provides intelligence in support of more efficient operations on resources, such as preservation, reformatting, analysis, discovery and use. It operates at its best in a networked environment, but is still a necessity in any digital storage and preservation environment. Metadata instructs end-users (people and computerised programmes) about how the data are to be interpreted. Metadata is vital to the understanding, coherence and successful functioning of each and every encounter with the archived object at any point in its lifecycle and with any objects associated with or derived from it.

3.1.2 It will be helpful to think about metadata in functional terms as “schematized statements about resources: schematized because machine understandable, [as well as human readable]; statements because they involve a claim about the resource by a particular agent; resource because any identifiable object may have metadata associated with it” (Dempsey 2005). Such schematized (or encoded) statements (also referred to as metadata ‘instances’) may be very simple, a single Uniform Resource Identifier (URI), within a single pair of angle brackets < > as a container or wrapper and a namespace. Typically they may become highly evolved and modular, comprising many containers within containers, wrappers within wrappers, each drawing on a range of namespace schemas, and assembled at different stages of a workflow and over an extended period of time. It would be most unusual for one person to create in one session a definitive, complete metadata instance for any given digital object that stands for all time.

3.1.3 Regardless of how many versions of an audio file may be created over time, all significant properties of the file that has archival status must remain unchanged. This same principle applies to any metadata embedded in the object (see section 3.1.4 below). However, data about any object are changeable over time: new information is discovered, opinions and terminology change, contributors die and rights expire or are re-negotiated. It is therefore often advisable to keep audio files and all or some metadata files separate, establish appropriate links between them, and update the metadata as information and resources become available. Editing the metadata within a file is possible, though cumbersome, and does not scale up as an appropriate approach for larger collections. Consequently, the extent to which data is embedded in the files as well as in a separate data management system will be determined by the size of the collection, the sophistication of the particular data management system, and the capabilities of the archive personnel.

3.1.4 Metadata may be integrated with the audio files and is in fact suggested as an acceptable solution for a small scale approach to digital storage systems (see section 7.4 Basic Metadata). The Broadcast Wave Format (BWF), standardized by the European Broadcasting Union (EBU), is an example of such audio metadata integration, which allows the storage of a limited number of descriptive data within the .wav file (see section 2.8 File Formats). One advantage of storing the metadata within the file is that it removes the risk of losing the link between metadata and the digital audio. The BWF format supports the acquisition of process metadata and many of the tools associated with that format can acquire the data and populate the appropriate part of the BEXT (broadcast extension) chunk. The metadata might therefore include coding history, which is loosely defined in the BWF standard, and allows the documentation of the processes that lead to the creation of the digital audio object. This shares similarities with the event entity in PREMIS (see 3.5.2, 3.7.3 and Fig. 1). When digitizing from analogue sources the BEXT chunk can also be used to store qualitative information about the audio content. When creating a digital object from a digital source, such as DAT or CD, the BEXT chunk can be used to record errors that might have occurred in the encoding process.

A=<ANALOGUE> Information about the analogue sound signal path

A=<PCM> Information about the digital sound signal path

F=<48000, 44100, etc.> Sampling frequency [Hz]

W=<16, 18, 20, 22, 24, etc.> Word length [bits]

M=<mono, stereo, 2-channel> Mode

T=<free ASCII-text string> Text for comments

Coding History Field: BWF (http://www.ebu.ch/CMSimages/en/tec_text_r98-1999_tcm6-4709.pdf)

A=ANALOGUE, M=Stereo,T=Studer A820;SN1345;19.05;Reel;AMPEX 406

A=PCM, F=48000,W=24, M=Stereo,T=Apogee PSX-100;SN1516;RME DIGI96/8 Pro

A=PCM, F=48000,W=24, M=Stereo,T=WAV

A=PCM, F=48000,W=24, M=stereo,T=2006-02-20 File Parser brand name

A=PCM, F=48000,W=24, M=stereo,T=File Converter brand name 2006 -02-20; 08:10:02

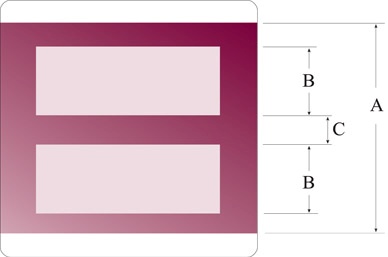

Fig. 1 National Library of Australia’s interpretation of the coding history of an original reel converted to BWF using database and automated systems.
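Coding history lines of the kind shown in Fig. 1 are regular enough to be read by machine. The following is a minimal sketch, assuming the comma-separated `key=value` layout of the examples above with the free-text `T=` field last; the function name is hypothetical and the parser does not attempt to validate values against the BWF standard.

```python
def parse_coding_history_line(line: str) -> dict:
    """Split one coding history line into its A, F, W, M and T fields."""
    # T= holds free text and comes last in the examples, so everything after it is kept whole.
    head, found, text = line.partition("T=")
    fields = {}
    for part in head.split(","):
        key, equals, value = part.partition("=")
        if equals:
            fields[key.strip()] = value.strip()
    if found:
        fields["T"] = text.strip()
    return fields


history = [
    "A=ANALOGUE, M=Stereo,T=Studer A820;SN1345;19.05;Reel;AMPEX 406",
    "A=PCM, F=48000,W=24, M=Stereo,T=Apogee PSX-100;SN1516;RME DIGI96/8 Pro",
]
for entry in history:
    print(parse_coding_history_line(entry))
```

Parsed in this way, each line becomes one step in the signal chain and can be copied into an external data management system without retyping.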

3.1.5 The Library of Congress has been working on formalising and expanding the various data chunks in the BWF file. “Embedded Metadata and Identifiers for Digital Audio Files and Objects: Recommendations for WAVE and BWF Files Today” is their latest draft, available for comment at http://home.comcast.net/~cfle/AVdocs/Embed_Audio_081031.doc. AES X098C is another development in the documentation of process and digital provenance metadata.

3.1.6 There are, however, many advantages to maintaining metadata and content separately, by employing, for instance, a framework standard such as METS (Metadata Encoding and Transmission Standard; see section 3.8 Structural Metadata – METS). Updating, maintaining and correcting metadata is much simpler in a separate metadata repository. Expanding the metadata fields so as to incorporate new requirements or information is only possible in an extensible, and separate, system, and creating a variety of new ways of sharing the information requires a separate repository to create metadata that can be used by those systems. For larger collections the burden of maintaining metadata only in the headers of the BWF file would be unsustainable. MPEG-7 requires that audio content and descriptive metadata are separated, though descriptions can be multiplexed with the content as alternating data segments.

3.1.7 It is of course possible to wrap a BWF file in much richer metadata and, provided the information kept in BWF is fixed and limited, this combines the advantages of both approaches. Another example of integration is the tag metadata that needs to be present in access files so that a user may verify that the object downloaded or being streamed is the object that was sought and selected. ID3, the tag used in MP3 files to describe content information which is readily interpreted by most players, allows a minimum set of descriptive metadata. METS itself has also been investigated as a possible container for packaging metadata and content together, though the potential size of such documents suggests this may not be a viable option to pursue.

3.1.8 A general solution for separating the metadata from the contents (possibly with redundancy if the contents includes some metadata) is emerging from work being undertaken in several universities in liaison with major industrial suppliers such as SUN Microsystems, Hewlett-Packard and IBM. The concept is to always store the representation of one resource as two bundled files: one including the ‘contents’ and the other including the metadata associated to that content. The second file includes:

3.1.8.1 The list of identifiers according to all the involved rationales. It is in fact a series of “aliases” pertaining to the URN and the local representation of the resource (URL).

3.1.8.2 The technical metadata (bits per sample / sampling rate; accurate format definition; possibly the associated ontology).

3.1.8.3 The factual metadata (GPS coordinates / Universal time code / Serial number of the equipment / Operator / ...).

3.1.8.4 The semantic metadata.
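The four groups listed above can be pictured as one record stored beside the content file. The following is a minimal sketch only: the class name, field names, identifier values and the choice of JSON as the serialisation are all assumptions made for illustration and are not prescribed by the work described in 3.1.8.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class SidecarMetadata:
    """Second file of the bundle: metadata kept beside, not inside, the content file."""
    identifiers: list = field(default_factory=list)   # aliases: URN, local URL, ...
    technical: dict = field(default_factory=dict)     # bits per sample, sampling rate, format
    factual: dict = field(default_factory=dict)       # time, equipment serial number, operator
    semantic: dict = field(default_factory=dict)      # descriptive statements


record = SidecarMetadata(
    identifiers=["urn:example:archive:0001", "file:///archive/0001.wav"],  # invented values
    technical={"bits_per_sample": 24, "sampling_rate_hz": 48000, "format": "BWF"},
    factual={"operator": "unknown", "equipment_serial": "unknown"},
    semantic={"title": "Untitled field recording"},
)

sidecar_text = json.dumps(asdict(record), indent=2, sort_keys=True)
print(sidecar_text)
```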

3.1.9 In summary, most systems will adopt a practical approach that allows metadata to be both embedded within files and maintained separately, establishing priorities (i.e. which is the primary source of information) and protocols (rules for maintaining the data) to ensure the integrity of the resource.

3.2 Production

3.2.1 The rest of this chapter assumes that in most cases the audio files and the metadata files will be created and managed separately, in which case metadata production involves logistics – moving information, materials and services through a network cost-effectively. However, a small scale collection, or an archive in earlier stages of development, may find advantages in embedding metadata in BWF and selectively populating a subset of the information described below. If done carefully, and with due understanding of the standards and schemas discussed in this chapter, such an approach is sustainable and will be migratable to a fully implemented system as described below. Though a decision can be made by an archive to embed all or some metadata within the file headers, or to manage only some data separately, the information within this chapter will still inform this approach. (See also Chapter 7 Small Scale Approaches to Digital Storage Systems).

3.2.2 Until recently the producers of information about recordings either worked in a cataloguing team or in a technical team and their outputs seldom converged. Networked spaces blur historic demarcations. Needless to say, the embodiment of logistics in a successful workflow also requires the involvement of people who understand the workings and connectivity of networked spaces. Metadata production therefore involves close collaboration between audio technicians, Information Technology (IT) and subject specialists. It also requires attentive management working to a clearly stated strategy that can ensure workflows are sustainable and adaptable to the fast-evolving technologies and applications associated with metadata production.

3.2.3 Metadata is like interest - it accrues over time. If thorough, consistent metadata has been created, it is possible to predict this asset being used in an almost infinite number of new ways to meet the needs of many types of user, for multi-versioning, and for data mining. But the resources and intellectual and technical design issues involved in metadata development and management are not trivial. For example, some key issues that must be addressed by managers of any metadata system include:

3.2.3.1 Identifying which metadata schema or extension schemas should be applied in order to best meet the needs of the production teams, the repository itself and the users;

3.2.3.2 Deciding which aspects of metadata are essential for what they wish to achieve, and how granular they need each type of metadata to be. As metadata is produced for the long-term there will likely always be a trade-off between the costs of developing and managing metadata to meet current needs, and creating sufficient metadata that will serve future, perhaps unanticipated demands;

3.2.3.3 Ensuring that the metadata schemas being applied are the most current versions.

3.2.3.4 Interoperability is another factor; in the digital age, no archive is an island. In order to send content to another archive or agency successfully, there will need to be commonality of structure and syntax. This is the principle behind METS and BWF.

3.2.4 A measure of complexity is to be expected in a networked environment where responsibility for the successful management of data files is shared. Such complexity is only unmanageable, however, if we cling to old ways of working that evolved in the early days of computers in libraries and archives – before the Web and XML. As Richard Feynman said of his own discipline, physics, 'you cannot expect old designs to work in new circumstances'. A new general set of system requirements and a measure of cultural change are needed. These in turn will permit viable metadata infrastructures to evolve for audiovisual archives.

3.3 Infrastructure

3.3.1 We do not need a ‘discographic’ metadata standard: a domain-specific solution will be an unworkable constraint. We need a metadata infrastructure that has a number of core components shared with other domains, each of which may allow local variations (e.g. in the form of extension schema) that are applicable to the work of any particular audiovisual archive. Here are some of the essential qualities that will help to define the structural and functional requirements:

3.3.1.1 Versatility: For the metadata itself, the system must be capable of ingesting, merging, indexing, enhancing, and presenting to the user, metadata from a variety of sources describing a variety of objects. It must also be able to define logical and physical structures, where the logical structure represents intellectual entities, such as collections and works, while the physical structure represents the physical media (or carriers) which constitute the source for the digitized objects. The system must not be tied to one particular metadata schema: it must be possible to mix schema in application profiles (see 3.9.8) suited to the archive’s particular needs though without compromising interoperability. The challenge is to build a system that can accommodate such diversity without needless complication for low threshold users, and without preventing more complex activities for those requiring more room for manoeuvre.

3.3.1.2 Extensibility: Able to accommodate a broad range of subjects, document types (e.g. image and text files) and business entities (e.g. user authentication, usage licenses, acquisition policies, etc.). Allow for extensions to be developed and applied or ignored altogether without breaking the whole, in other words be hospitable to experimentation: implementing metadata solutions remains an immature science.

3.3.1.3 Sustainability: Capable of migration, cost-effective to maintain, usable, relevant and fit for purpose over time.

3.3.1.4 Modularity: The systems used to create or ingest metadata, and merge, index and export it should be modular in nature so that it is possible to replace a component that performs a specific function with a different component, without breaking the whole.

3.3.1.5 Granularity: Metadata must be of a sufficient granularity to support all intended uses. Metadata can easily be insufficiently granular, while it would be the rare case where metadata would be too granular to support a given purpose.

3.3.1.6 Liquidity: Write once, use many times. Liquidity will make digital objects and representations of those objects self-documenting across time; the metadata will work harder for the archive in many networked spaces and provide high returns for the original investments of time and money.

3.3.1.7 Openness and transparency: Supports interoperability with other systems. To facilitate requirements such as extensibility, the standards, protocols, and software incorporated should be as open and transparent as possible.

3.3.1.8 Relational (hierarchy/sequence/provenance): Must express parent-child relationships, correct sequencing, e.g. the scenes of a dramatic performance, and derivation. For digitized items, be able to support accurate mappings and instantiations of original carriers and their intellectual content to files. This helps ensure the authenticity of the archived object (Tennant 2004).

3.3.2 This recipe for diversity is itself a form of openness. If an open W3C (World Wide Web Consortium) standard, such as Extensible Markup Language (XML), a widely adopted mark-up language, is selected then this will not prevent particular implementations from including a mixture of standards such as Material Exchange Format (MXF) and Microsoft’s Advanced Authoring Format (AAF) interchange formats.

3.3.3 Although MXF is an open standard, in practice the inclusion of metadata in the MXF is commonly made in a proprietary way. MXF has further advantages for the broadcast industry because it can be used to professionally stream content whereas other wrappers only support downloading the complete file. The use of MXF for wrapping contents and metadata would only be acceptable for archiving after the replacement of any metadata represented in proprietary formats by open metadata formats.

3.3.4 So much has been written and said about XML that it would be easy to regard it as a panacea. XML is not a solution in itself but a way of approaching content organisation and re-use, its immense power harnessed through combining it with an impressive array of associated tools and technologies that continue to be developed in the interests of economical re-use and repurposing of data. As such, XML has become the de-facto standard for representing metadata descriptions of resources on the Internet. A decade of euphoria about XML is now matched by the means to handle it thanks to the development of many open source and commercial XML editing tools (See 3.6.2).

3.3.5 Although reference is made in this chapter to specific metadata formats that are in use today, or that promise to be useful in the future, these are not meant to be prescriptive. By observing those key qualities in section 3.3.1 and maintaining explicit, comprehensive and discrete records of all technical details, data creation and policy changes, including dates and responsibility, future migrations and translations will not require substantial changes to the underlying infrastructure. A robust metadata infrastructure should be able to accommodate new metadata formats by creating or applying tools specific to that format, such as crosswalks, or algorithms for translating metadata from one encoding scheme to another in an effective and accurate manner. A number of crosswalks already exist for formats such as MARC, MODS, MPEG-7 Path, SMPTE and Dublin Core. Besides using crosswalks to move metadata from one format to another, they can also be used to merge two or more different metadata formats into a third, or into a set of searchable indexes. Given an appropriate container/transfer format, such as METS, virtually any metadata format such as MARC-XML, Dublin Core, MODS, SMPTE (etc), can be accommodated. Moreover, this open infrastructure will enable archives to absorb catalogue records from their legacy systems in part or in whole while offering new services based on them, such as making the metadata available for harvesting – see OAI-PMH (Open Archives Initiative Protocol for Metadata Harvesting).
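At its simplest, a crosswalk is a table that maps the elements of one scheme onto those of another. The following is a minimal sketch, assuming an invented set of legacy field names mapped to Dublin Core element names; a production crosswalk would also need to handle value conversion, qualifiers and repeatable source fields.

```python
# Hypothetical legacy field names mapped to Dublin Core element names.
LEGACY_TO_DC = {
    "item_title": "title",
    "performer": "creator",
    "recording_date": "date",
    "carrier_type": "format",
    "shelfmark": "identifier",
}


def crosswalk(record: dict, mapping: dict) -> tuple:
    """Translate a record to the target element set; return (translated, unmapped)."""
    translated, unmapped = {}, {}
    for source_field, value in record.items():
        target = mapping.get(source_field)
        if target is None:
            unmapped[source_field] = value      # keep for review rather than discard
        else:
            translated.setdefault(target, []).append(value)  # DC elements are repeatable
    return translated, unmapped


legacy = {
    "item_title": "Interview, reel 1",
    "performer": "Unknown speaker",
    "recording_date": "1968",
    "condition_note": "Slight print-through",
}
dc_record, leftovers = crosswalk(legacy, LEGACY_TO_DC)
print(dc_record)
print(leftovers)
```

Returning the unmapped fields separately makes any loss of information in the translation visible, which matters when legacy catalogue records are absorbed only in part.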

3.4 Design - Ontologies

3.4.1 Having satisfied those top-level requirements, a viable metadata design, in all its detail, will take its shape from an information model or ontology¹. Several ontologies may be relevant depending on the number of operations to be undertaken. CIDOC’s CRM (Conceptual Reference Model http://cidoc.ics.forth.gr/) is recommended for the cultural heritage sector (museums, libraries and archives); FRBR (Functional Requirements for Bibliographic Records http://www.loc.gov/cds/downloads/FRBR.PDF) will be appropriate for an archive consisting mainly of recorded performances of musical or literary works, its influence enhanced by close association with RDA (Resource Description and Access) and DCMI (Dublin Core Metadata Initiative). COA (Contextual Ontology Architecture http://www.rightscom.com/Portals/0/Formal_Ontology_for_Media_Rights_Tran...) will be fit for purpose if rights management is paramount, as will the Moving Picture Experts Group rights management standard, MPEG-21. RDF (Resource Description Framework http://www.w3.org/RDF/), a versatile and relatively light-weight specification, should be a component especially where Web resources are being created from the archival repository: this in turn admits popular applications such as RSS (Really Simple Syndication) for information feeds (syndication). Other suitable candidates that improve the machine handling and interpretation of the metadata may be found in the emerging ‘family’ of ontologies created using OWL (Web Ontology Language). Ontologies expressed in OWL can easily be defined and read using “Protégé”, an open tool from Stanford University: http://protege.stanford.edu/. OWL can be used for anything from a simple definition of terms up to complex object oriented modelling.

1 W3C definition: An ontology defines the terms used to describe and represent an area of knowledge. Ontologies are used by people, databases, and applications that need to share domain information (a domain is just a specific subject area or area of knowledge, like medicine, tool manufacturing, real estate, automobile repair, financial management, etc.). Ontologies include computer-usable definitions of basic concepts in the domain and the relationships among them (note that here and throughout this document, definition is not used in the technical sense understood by logicians). They encode knowledge in a domain and also knowledge that spans domains. In this way, they make that knowledge reusable.

3.5 Design – Element sets

3.5.1 A metadata element set comes next in the overall design. Here three main categories or groupings of metadata are commonly described:

3.5.1.1 Descriptive Metadata, which is used in the discovery and identification of an object.

3.5.1.2 Structural Metadata, which is used to display and navigate a particular object for a user and includes the information on the internal organization of that object, such as the intended sequence of events and relationships with other objects, such as images or interview transcripts.

3.5.1.3 Administrative Metadata, which represents the management information for the object (such as the namespaces that authorise the metadata itself), dates on which the object was created or modified, technical metadata (its validated content file format, duration, sampling rate, etc.), rights and licensing information. This category includes data essential to preservation.

3.5.2 All three categories, descriptive, structural and administrative, must be present regardless of the operation to be supported, though different sub-sets of the data may exist in any file or instantiation. So, if the metadata supports preservation – “information that supports and documents the digital preservation process” (PREMIS) – then it will be rich in data about the provenance of the object, its authenticity and the actions performed on it. If it supports discovery then some or all of the preservation metadata will be useful to the end user (i.e. as a guarantor of authenticity) though it will be more important to elaborate and emphasise the descriptive, structural and licensing data and provide the means for transforming the raw metadata into intuitive displays or in readiness for harvesting or interaction by networked external users. Needless to say, an item that cannot be found can neither be preserved nor listened to so the more inclusive the metadata, with regard to these operations, the better.

3.5.3 Each of those three groupings of metadata may be compiled separately: administrative (technical) metadata as a by-product of mass-digitization; descriptive metadata derived from a legacy database export; rights metadata as clearances are completed and licenses signed. However, the results of these various compilations need to be brought together and maintained in a single metadata instance or set of linked files together with the appropriate statements relating to preservation. It will be essential to relate all these pieces of metadata to a schema or DTD (Document Type Definition) otherwise the metadata will remain just a ‘blob’, an accumulation of data that is legible for humans but unintelligible for machines.

3.6 Design – Encoding and Schemas

3.6.1 In the same way that audio signals are encoded to a WAV file, which has a published specification, the element set will need to be encoded: XML, perhaps combined with RDF, is the recommendation stated above. This specification will be declared in the first line of any metadata instance <?xml version="1.0" encoding="UTF-8" ?>. This by itself provides little intelligence: it is like telling the listener that the page of the CD booklet they are reading is made of paper and is to be held in a certain way. What comes next will provide intelligence (remember, to machines as well as people) about the predictable patterns and semantics of data to be encountered in the rest of the file. The rest of the metadata file header consists typically of a sequence of namespaces for other standards and schema (usually referred to as ‘extension schema’) invoked by the design.

<mets:mets xmlns:mets="http://www.loc.gov/standards/mets/"
  xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
  xmlns:dc="http://dublincore.org/documents/dces/"
  xmlns:xlink="http://www.w3.org/TR/xlink/"
  xmlns:dcterms="http://dublincore.org/documents/dcmi-terms/"
  xmlns:dcmitype="http://purl.org/dc/dcmitype"
  xmlns:tel="http://www.theeuropeanlibrary.org/metadatahandbook/telterms.html"
  xmlns:mods="http://www.loc.gov/standards/mods/"
  xmlns:cld="http://www.ukoln.ac.uk/metadata/rslp/schema/"
  xmlns:blap="http://labs.bl.uk/metadata/blap/terms.html"
  xmlns:marcrel="http://id.loc.gov/vocabulary/relators.html"
  xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
  xmlns:blapsi="http://sounds.bl.uk/blapsi.xml"
  xmlns:namespace-prefix="blapsi">

Fig 2: Set of namespaces employed in the British Library METS profile for sound recordings
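The way namespaces qualify the elements of an instance can be shown with a very small example. The following is a minimal sketch using the Python standard library; the two namespace URIs are the commonly published ones for METS and Dublin Core and are an assumption here (they differ from those declared in the British Library profile in Fig 2), and the title value is invented.

```python
import xml.etree.ElementTree as ET

# Commonly published namespace URIs (assumed here; a local profile may declare others).
METS_NS = "http://www.loc.gov/METS/"
DC_NS = "http://purl.org/dc/elements/1.1/"
ET.register_namespace("mets", METS_NS)
ET.register_namespace("dc", DC_NS)

root = ET.Element(f"{{{METS_NS}}}mets")
dmd = ET.SubElement(root, f"{{{METS_NS}}}dmdSec", ID="dmd001")
wrap = ET.SubElement(dmd, f"{{{METS_NS}}}mdWrap", MDTYPE="DC")
data = ET.SubElement(wrap, f"{{{METS_NS}}}xmlData")
title = ET.SubElement(data, f"{{{DC_NS}}}title")
title.text = "Untitled field recording"     # invented value

xml_bytes = ET.tostring(root, encoding="UTF-8", xml_declaration=True)
print(xml_bytes.decode("utf-8"))

# Any namespace-aware parser can now tell which schema each element belongs to.
parsed = ET.fromstring(xml_bytes)
found = parsed.find(f".//{{{DC_NS}}}title")
```

The sketch produces a well-formed instance only; checking it against the schemas themselves would require a validating parser, which the standard library does not provide.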

3.6.2 Such intelligent specifications, in XML, are called XML schemas, the successor to DTDs. DTDs are still commonly encountered on account of the relative ease of their compilation. The schema will reside in a file with the extension .xsd (XML Schema Definition) and will have its own namespace to which other operations and implementations can refer. Schemas require expertise to compile. Fortunately open source tools are available that enable a computer to infer a schema from a well-formed XML file. Tools are also available to convert other formats, such as .pdf or .rtf (Word) documents, into XML. The schema may also incorporate the idealised means for displaying the data as an XSLT file. Schema (and namespaces) for descriptive metadata will be covered in more detail in 3.9 Descriptive Metadata – Application Profiles, Dublin Core (DC) below.

3.6.3 To summarise the above relationships, an XML Schema or DTD describes an XML structure that marks up textual content in the format of an XML encoded file. The file (or instance) will contain one or more namespaces representing the extension schema that further qualify the XML structure to be deployed.

3.7 Administrative Metadata – Preservation Metadata

3.7.1 The information described in this section is part of the administrative metadata grouping. It resembles the header information in the audio file and encodes the necessary operating information. In this way the computer system recognises the file and how it is to be used by first associating the file extension with a particular type of software, and reading the coded information in the file header. This information must also be referenced in a separate file to facilitate management and aid in future access because file extensions are at best ambiguous indicators of the functionality of the file. The fields which describe this explicit information, including type and version, can be automatically acquired from the headers of the file and used to populate the fields of the metadata management system. If an operating system, now or in the future, does not include the ability to play a .wav file or read an .xml instance for example, then the software will be unable to recognise the file extension and will not be able to access the file or determine its type. By making this information explicit in a metadata record, we make it possible for future users to use the preservation management data and decode the information data. The standards being developed in AES-X098B which will be released by the Audio Engineering Society as AES57 “AES standard for audio metadata – audio object structures for preservation and restoration” codify this aspiration.
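Acquiring technical fields automatically from the file header can be sketched with the Python standard library `wave` module. This is an illustration under stated assumptions: the dictionary keys are hypothetical field names for a metadata management system, the `wave` module reads linear PCM WAVE files only, and a short silent file built in memory stands in for an archived file.

```python
import io
import wave


def technical_metadata(stream) -> dict:
    """Read basic technical properties from the header of a PCM WAVE stream."""
    with wave.open(stream, "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        return {
            "format": "WAVE (PCM)",
            "channels": wav.getnchannels(),
            "word_length_bits": wav.getsampwidth() * 8,
            "sampling_rate_hz": rate,
            "duration_seconds": frames / rate,
        }


# A short silent file built in memory stands in for an archived file.
buffer = io.BytesIO()
with wave.open(buffer, "wb") as wav:
    wav.setnchannels(2)
    wav.setsampwidth(3)
    wav.setframerate(48000)
    wav.writeframes(b"\x00" * 3 * 2 * 24000)
buffer.seek(0)

record = technical_metadata(buffer)
print(record)
```

Storing these values in a separate record means they remain readable even when the software that understands the file header is no longer available.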

3.7.2 Format registries now exist, though they are still under development, that will help to categorise and validate file formats as a pre-ingest task. PRONOM is an online technical registry, including file formats, maintained by TNA (The National Archives, UK); it can be used in conjunction with another TNA tool, DROID (Digital Record Object Identification), which performs automated batch identification of file formats and outputs metadata. From the U.S., Harvard University's GDFR (Global Digital Format Registry) and JHOVE (JSTOR/Harvard Object Validation Environment, for the identification, validation, and characterization of digital objects) offer comparable services in support of preservation metadata compilation. Accurate information about the file format is the key to successful long-term preservation.
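The principle behind signature-based identification, as opposed to trusting the file extension, can be sketched in a few lines. This is not the algorithm or the data used by DROID, PRONOM or JHOVE: the signature table is a tiny hand-made assumption, the function name is hypothetical, and the sample byte strings are invented.

```python
SIGNATURES = [
    # (offset, bytes expected at that offset, label); a tiny hand-made table, not PRONOM data
    (0, b"RF64", "RF64 container"),
    (0, b"RIFF", "RIFF container"),
    (0, b"ID3", "MP3 with ID3v2 tag"),
    (0, b"<?xml", "XML document"),
]


def identify(data: bytes) -> str:
    """Guess a format from the leading bytes, ignoring the file extension entirely."""
    for offset, magic, label in SIGNATURES:
        if data[offset:offset + len(magic)] == magic:
            if magic in (b"RIFF", b"RF64") and data[8:12] == b"WAVE":
                return label + ", WAVE audio"
            return label
    return "unidentified"


samples = {
    "misnamed.txt": b"RIFF\x24\x00\x00\x00WAVEfmt ",
    "large.wav": b"RF64\xff\xff\xff\xffWAVEds64",
    "notes.wav": b"plain text saved with the wrong extension",
}
results = {name: identify(data) for name, data in samples.items()}
print(results)
```

The misnamed files show why extensions alone are ambiguous indicators: the content, not the name, determines how a file must be handled.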

3.7.3 Most important is that all aspects of preservation and transfer relating to audio files, including all technical parameters are carefully assessed and kept. This includes all subsequent measures carried out to safeguard the audio document in the course of its lifetime. Though much of the metadata discussed here can be safely populated at a later date the record of the creation of the digital audio file, and any changes to its content, must be created at the time the event occurs. This history metadata tracks the integrity of the audio item and, if using the BWF format, can be recorded as part of the file as coding history in the BEXT chunk. This information is a vital part of the PREMIS preservation metadata recommendations. Experience shows that computers are capable of producing copious amounts of technical data from the digitization process. This may need to be distilled in the metadata that is to be kept. Useful element sets are proposed in the interim set AudioMD (http://www.loc.gov/rr/mopic/avprot/audioMD_v8.xsd), an extension schema developed by Library of Congress, or the AES audioObject XML schema which at the time of writing is under review as a standard.
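Recording an event at the time it occurs can be as simple as writing a dated record together with a digest of the file. The following is a minimal sketch: the field names are loosely modelled on PREMIS vocabulary but do not follow the PREMIS schema, the use of a SHA-256 digest as the integrity check is an assumption of this example, and the byte string stands in for a real audio file.

```python
import hashlib
from datetime import datetime, timezone


def record_event(event_type: str, detail: str, content: bytes) -> dict:
    """Create an event record at the moment the action is carried out."""
    return {
        "event_type": event_type,
        "event_datetime": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "event_detail": detail,
        "message_digest_algorithm": "SHA-256",
        "message_digest": hashlib.sha256(content).hexdigest(),
    }


def verify(content: bytes, event: dict) -> bool:
    """Check that the content still matches the digest stored in the event record."""
    return hashlib.sha256(content).hexdigest() == event["message_digest"]


audio = b"\x00\x01\x02\x03" * 1000            # placeholder for the bytes of an audio file
creation = record_event("creation", "Transfer from open reel tape", audio)

print(creation)
print("unchanged:", verify(audio, creation))
print("after alteration:", verify(audio + b"\x00", creation))
```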

3.7.4 If digitising from legacy collections, these schemas are useful not only for describing the digital file, but also the physical original. Care needs to be taken to avoid ambiguity about which object is being described in the metadata: it will be necessary to describe the work, its original manifestation and subsequent digital versions but it is critical to be able to distinguish what is being described in each instance. PREMIS distinguishes the various components in the sequence of change by associating them with events, and linking the resultant metadata through time.

3.8 Structural Metadata – METS

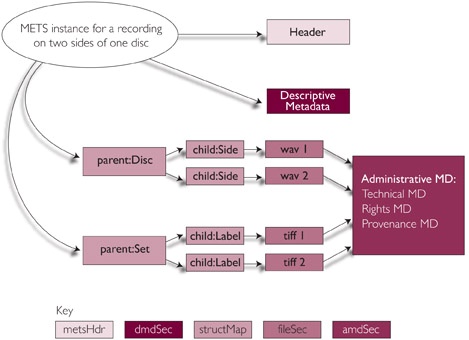

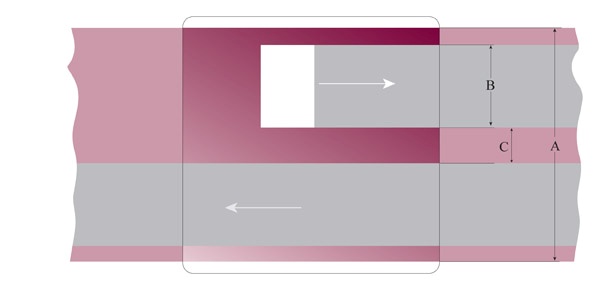

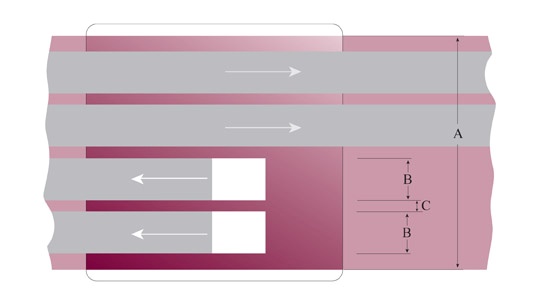

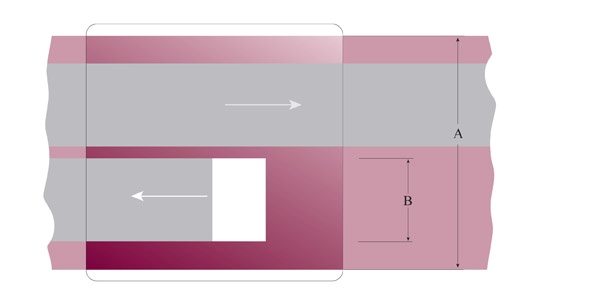

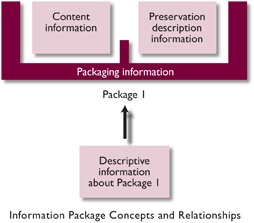

3.8.1 Time-based media are very often multimedia and complex. A field recording may consist of a sequence of events (songs, dances, rituals) accompanied by images and field notes. A lengthy oral history interview occupying more than one .wav file may also be accompanied by photographs of the speakers and written transcripts or linguistic analysis. Structural metadata provides an inventory of all relevant files and intelligence about external and internal relationships including preferred sequencing, e.g. the acts and scenes of an operatic recording. METS (Metadata Encoding and Transmission Standard, current version is 1.7) with its structural map (structMap) and file group (fileGrp) sections has a recent but proven track record of successful applications in audiovisual contexts (see fig. 3).

Fig 3: components of a METS instance and one possible set of relationships among them

3.8.2 The components of a METS instance are:

3.8.2.1 A header describes the METS object itself, such as who created this object, when, for what purpose. The header information supports management of the METS file proper.

3.8.2.2 The descriptive metadata section contains information describing the information resource represented by the digital object and enables it to be discovered.

3.8.2.3 The structural map, represented by the individual leaves and details, orders the digital files of the object into a browsable hierarchy.

3.8.2.4 The content file section, represented by images one through five, declares which digital files constitute the object. Files may be either embedded in the object or referenced.

3.8.2.5 The administrative metadata section contains information about the digital files declared in the content file section. This section subdivides into:

3.8.2.5.1 technical metadata, specifying the technical characteristics of a file

3.8.2.5.2 source metadata, specifying the source of capture (e.g., direct capture or reformatted 4 x 5 transparency)

3.8.2.5.3 digital provenance metadata, specifying the changes a file has undergone since its birth

3.8.2.5.4 rights metadata, specifying the conditions of legal access.

3.8.2.6 The sections on technical metadata, source metadata, and digital provenance metadata carry the information pertinent to digital preservation.

3.8.2.7 For the sake of completeness, the behaviour section, not shown above in Fig. 3, associates executables with a METS object. For example, a METS object may rely on a certain piece of code to instantiate for viewing, and the behaviour section could reference that code.
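The components listed in 3.8.2 can be sketched as a skeleton METS instance. The example below uses Python's standard `xml.etree.ElementTree` to assemble a header, an empty descriptive section, the four administrative subsections, a file group and a structural map for an interview spread over several .wav files. The identifiers, labels, dates and file names are invented for illustration, and the output has not been validated against the METS schema.

```python
import xml.etree.ElementTree as ET

METS = "http://www.loc.gov/METS/"
XLINK = "http://www.w3.org/1999/xlink"
ET.register_namespace("mets", METS)
ET.register_namespace("xlink", XLINK)


def m(tag):
    """Qualify a tag name with the METS namespace."""
    return f"{{{METS}}}{tag}"


def build_mets(file_hrefs):
    """Assemble a skeleton METS instance for an ordered list of audio files."""
    root = ET.Element(m("mets"))
    ET.SubElement(root, m("metsHdr"), CREATEDATE="2009-01-01T00:00:00")
    ET.SubElement(root, m("dmdSec"), ID="DMD1")

    # Administrative metadata: technical, rights, source, digital provenance.
    amd = ET.SubElement(root, m("amdSec"), ID="AMD1")
    for part in ("techMD", "rightsMD", "sourceMD", "digiprovMD"):
        ET.SubElement(amd, m(part), ID=f"{part}-1")

    file_sec = ET.SubElement(root, m("fileSec"))
    file_grp = ET.SubElement(file_sec, m("fileGrp"), USE="preservation master")

    struct = ET.SubElement(root, m("structMap"), TYPE="logical")
    top = ET.SubElement(struct, m("div"), LABEL="Interview")

    for n, href in enumerate(file_hrefs, start=1):
        file_id = f"FILE{n}"
        # Content file section: declare the file by reference.
        entry = ET.SubElement(file_grp, m("file"), ID=file_id, MIMETYPE="audio/x-wav")
        ET.SubElement(entry, m("FLocat"), {"LOCTYPE": "URL", f"{{{XLINK}}}href": href})
        # Structural map: record the preferred playback order.
        part = ET.SubElement(top, m("div"), ORDER=str(n), LABEL=f"Part {n}")
        ET.SubElement(part, m("fptr"), FILEID=file_id)
    return root


mets = build_mets(["interview_part1.wav", "interview_part2.wav"])
print(ET.tostring(mets, encoding="unicode"))
```

The file pointers in the structural map refer to the IDs declared in the file section, which is how the sequence of parts is kept separate from the storage location of each file.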

3.8.3 Structural metadata may need to represent additional business objects:

3.8.3.1 user information (authentication)

3.8.3.2 rights and licenses (how an object may be used)

3.8.3.3 policies (how an object was selected by the archive)

3.8.3.4 services (copying and rights clearance)

3.8.3.5 organizations (collaborations, stakeholders, sources of funding).

3.8.4 These may be represented by files referenced to a specific address or URL. Explanatory annotations may be provided in the metadata for human readers.

3.9 Descriptive Metadata – Application Profiles, Dublin Core (DC)

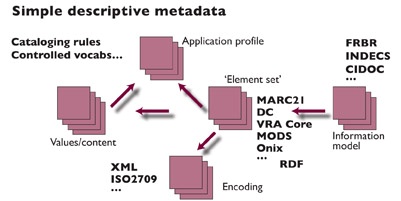

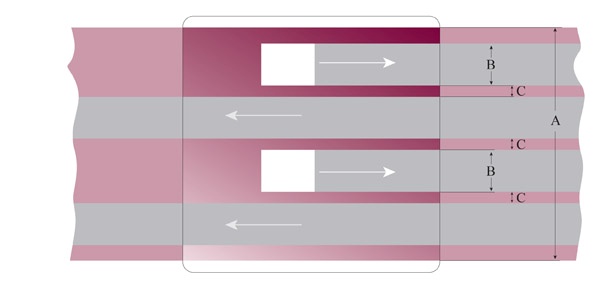

3.9.1 Much of the effort devoted to metadata in the heritage sector has focussed on descriptive metadata as an offshoot of traditional cataloguing. However, it is clear that too much attention in this area (e.g. localised refinements of descriptive tags and controlled vocabularies) at the expense of other considerations described above will result in system shortcomings overall. Figure 4 demonstrates the various inter-dependencies that need to be in place, descriptive metadata tags being just one sub-set of all the elements in play.

Fig 4: simple descriptive metadata (courtesy Dempsey, CLIR/DLF primer, 2005)

3.9.2 Interoperability must be a key component of any metadata strategy: elaborate systems devised independently for one archival repository by a dedicated team will be a recipe for low productivity, high costs and minimal impact. The result will be a metadata cottage industry incapable of expansion. Descriptive metadata is indeed a classic case of Richard Gabriel’s maxim ‘Worse is better’. Comparing two programming languages, one elegant but complex, the other awkward but simple, Gabriel predicted, correctly, that the language that was simpler would spread faster, and as a result, more people would come to care about improving the simple language than improving the complex one. This is demonstrated by the widespread adoption and success of Dublin Core (DC), initially regarded as an unlikely solution by the professionals on account of its rigorous simplicity.

3.9.3 The mission of DCMI (DC Metadata Initiative) has been to make it easier to find resources using the Internet through developing metadata standards for discovery across domains, defining frameworks for the interoperation of metadata sets and facilitating the development of community- or discipline-specific metadata sets that are consistent with these aims. DC itself is a vocabulary of just fifteen elements for use in resource description and provides economically for all three categories of metadata. None of the elements is mandatory and all are repeatable, although implementers may specify otherwise in application profiles – see section 3.9.8 below. The name “Dublin” is due to its origin at a 1995 invitational workshop in Dublin, Ohio; “core” because its elements are broad and generic, usable for describing a wide range of resources. DC has been in widespread use for more than a decade and the fifteen element descriptions have been formally endorsed in the following standards: ISO Standard 15836-2003 of February 2003 [ISO15836 http://dublincore.org/documents/dces/#ISO15836], NISO Standard Z39.85-2007 of May 2007 [NISOZ3985 http://dublincore.org/documents/dces/#NISOZ3985] and IETF RFC 5013 of August 2007 [RFC5013 http://dublincore.org/documents/dces/#RFC5013].

Table 1 (below) lists the fifteen DC elements with their (shortened) official definitions and suggested interpretations for audiovisual contexts.

| DC element | DC definition | Audiovisual interpretation |
|---|---|---|
| Title | A name given to the resource | The main title associated with the recording |
| Subject | The topic of the resource | Main topics covered |
| Description | An account of the resource | Explanatory notes, interview summaries, descriptions of environmental or cultural context, list of contents |
| Creator | An entity primarily responsible for making the resource | Not authors or composers of the recorded works but the name of the archive |
| Publisher | An entity responsible for making the resource available | Not the publisher of the original document that has been digitized. Typically the publisher will be the same as the Creator |
| Contributor | An entity responsible for making contributions to the resource | Any named person or sound source. Will need suitable qualifier, such as role (e.g. performer, recordist) |
| Date | A point or period of time associated with an event in the lifecycle of the resource | Not the recording or (P) date of the original but a date relating to the resource itself |
| Type | The nature or genre of the resource | The domain of the resource, not the genre of the music. So Sound, not Jazz |
| Format | The file format, physical medium, or dimensions of the resource | The file format, not the original physical carrier |
| Identifier | An unambiguous reference to the resource within a given context | Likely to be the URI of the audio file |
| Source | A related resource from which the described resource is derived | A reference to a resource from which the present resource is derived |
| Language | A language of the resource | A language of the resource |
| Relation | A related resource | Reference to related objects |
| Coverage | The spatial or temporal topic of the resource, the spatial applicability of the resource, or the jurisdiction under which the resource is relevant | What the recording exemplifies, e.g. a cultural feature such as traditional songs or a dialect |
| Rights | Information about rights held in and over the resource | Information about rights held in and over the resource |

Table 1: The DC 15 elements
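To show how the fifteen elements might be applied to a digitised recording, the sketch below builds a simple DC record with Python's standard `xml.etree.ElementTree`. The wrapper element `record`, the archive name and the identifier URL are assumptions made for the example. Because every element is optional and repeatable, each field takes a list of values.

```python
import xml.etree.ElementTree as ET

DC = "http://purl.org/dc/elements/1.1/"
ET.register_namespace("dc", DC)

DC_ELEMENTS = {
    "title", "subject", "description", "creator", "publisher",
    "contributor", "date", "type", "format", "identifier",
    "source", "language", "relation", "coverage", "rights",
}


def dc_record(fields):
    """Build a record from a mapping of DC element name to a list of values."""
    record = ET.Element("record")  # hypothetical wrapper element
    for element, values in fields.items():
        if element not in DC_ELEMENTS:
            raise ValueError(f"not a DC element: {element}")
        for value in values:
            ET.SubElement(record, f"{{{DC}}}{element}").text = value
    return record


example = dc_record({
    "title": ["Oral history interview, part 1"],
    "creator": ["Example Sound Archive"],       # the archive, not the speaker
    "type": ["Sound"],                          # the domain, not the genre
    "format": ["audio/x-wav"],                  # the file, not the carrier
    "identifier": ["http://archive.example.org/audio/0001.wav"],
    "language": ["en", "de"],                   # elements are repeatable
})
print(ET.tostring(example, encoding="unicode"))
```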

3.9.4 The elements of DC have been expanded to include further properties. These are referred to as DC Terms. A number of these additional elements (‘terms’) will be useful for describing time-based media:

| DC Term | DC definition | Audiovisual interpretation |
|---|---|---|
| Alternative | Any form of the title used as a substitute or alternative to the formal title of the resource | An alternative title, e.g. a translated title, a pseudonym, an alternative ordering of elements in a generic title |
| Extent | The size or duration of the resource | File size and duration |
| extentOriginal | The physical or digital manifestation of the resource | The size or duration of the original source recording(s) |
| Spatial | Spatial characteristics of the intellectual content of the resource | Recording location, including topographical co-ordinates to support map interfaces |
| Temporal | Temporal characteristics of the intellectual content of the resource | Occasion on which recording was made |
| Created | Date of creation of the resource | Recording date and any other significant date in the lifecycle of the recording |

Table 2: DC Terms (a selection)

3.9.5 Implementers of DC may choose to use the fifteen elements either in their legacy dc: variant (e.g., http://purl.org/dc/elements/1.1/creator) or in the dcterms: variant (e.g., http://purl.org/dc/terms/creator) depending on application requirements. Over time, however, and especially if RDF is part of the metadata strategy, implementers are expected (and encouraged by DCMI) to use the semantically more precise dcterms: properties, as they more fully comply with best practice for machine-processable metadata.
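Because each of the fifteen legacy elements has a property of the same name in the dcterms: namespace, moving from one variant to the other amounts to swapping the namespace part of the URI. A minimal sketch, with a hypothetical function name:

```python
DC_LEGACY = "http://purl.org/dc/elements/1.1/"
DC_TERMS = "http://purl.org/dc/terms/"


def to_dcterms(uri):
    """Map a legacy dc: element URI to its dcterms: counterpart.

    URIs outside the legacy namespace are returned unchanged.
    """
    if uri.startswith(DC_LEGACY):
        return DC_TERMS + uri[len(DC_LEGACY):]
    return uri


print(to_dcterms("http://purl.org/dc/elements/1.1/creator"))
```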

3.9.6 Even in this expanded form, DC may lack the fine granularity required in a specialised audiovisual archive. The Contributor element, for example, will typically need to mention the role of the Contributor in the recording to avoid, for instance, confusing performers with composers or actors with dramatists. A list of common roles (or ‘relators’) for human agents has been devised (MARC relators) by the Library of Congress. Here are two examples of how they can be implemented.

<dcterms:contributor>

<marcrel:CMP>Beethoven, Ludwig van, 1770-1827</marcrel:CMP>

<marcrel:PRF>Quatuor Pascal</marcrel:PRF>

</dcterms:contributor>

<dcterms:contributor>

<marcrel:SPK>Greer, Germaine, 1939- (female)</marcrel:SPK>

<marcrel:SPK>McCulloch, Joseph, 1908-1990 (male)</marcrel:SPK>

</dcterms:contributor>

The first example tags ‘Beethoven’ as the composer (CMP) and ‘Quatuor Pascal’ as the performer (PRF). The second tags both contributors, Greer and McCulloch, as speakers (SPK) though does not go as far as determining who is the interviewer and who is the interviewee. That information would need to be conveyed elsewhere in the metadata, e.g. in Description or Title.
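A consuming system can read such role-qualified contributors back out of a record. The sketch below parses the two examples with Python's standard `xml.etree.ElementTree` and groups the names by role. The wrapper element `record`, the namespace URI bound to the `marcrel` prefix and the label table are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

DCTERMS = "http://purl.org/dc/terms/"
MARCREL = "http://www.loc.gov/loc.terms/relators/"  # assumed namespace URI

# Labels for the three relator codes used in the examples.
ROLE_LABELS = {"CMP": "composer", "PRF": "performer", "SPK": "speaker"}

SAMPLE = f"""
<record xmlns:dcterms="{DCTERMS}" xmlns:marcrel="{MARCREL}">
  <dcterms:contributor>
    <marcrel:CMP>Beethoven, Ludwig van, 1770-1827</marcrel:CMP>
    <marcrel:PRF>Quatuor Pascal</marcrel:PRF>
  </dcterms:contributor>
  <dcterms:contributor>
    <marcrel:SPK>Greer, Germaine, 1939- (female)</marcrel:SPK>
    <marcrel:SPK>McCulloch, Joseph, 1908-1990 (male)</marcrel:SPK>
  </dcterms:contributor>
</record>
"""


def contributors_by_role(xml_text):
    """Group contributor names by the role given in the relator element."""
    roles = {}
    root = ET.fromstring(xml_text)
    for contributor in root.iter(f"{{{DCTERMS}}}contributor"):
        for child in contributor:
            code = child.tag.rsplit("}", 1)[-1]  # strip the namespace part
            label = ROLE_LABELS.get(code, code)
            roles.setdefault(label, []).append(child.text)
    return roles


print(contributors_by_role(SAMPLE))
```

Both speakers end up under the same role, which illustrates the limitation noted above: the relator code alone cannot distinguish interviewer from interviewee.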

3.9.7 In this respect, other schemas may be preferable, or could be included as additional extension schemas (as illustrated in Fig. 2). MODS (Metadata Object Description Schema http://www.loc.gov/standards/mods/), for instance, allows for more granularity in names and linkage with authority files, a reflection of its derivation from the MARC standard:

- name
  - Subelements:
    - namePart
      - Attribute: type (date, family, given, termsOfAddress)
    - displayForm
    - affiliation
    - role
      - roleTerm
        - Attributes: type (code, text); authority (see: http://www.loc.gov/standards/sourcelist/)
    - description
  - Attributes: ID; xlink; lang; xml:lang; script; transliteration
    - type (enumerated: personal, corporate, conference)
    - authority (see: http://www.loc.gov/standards/sourcelist/)
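The finer granularity of the MODS name structure can be seen by encoding one of the speakers from 3.9.6. The sketch below uses Python's standard `xml.etree.ElementTree`; the function name is hypothetical, and the choice of the `marcrelator` authority with the lowercase code `spk` is an assumption about how an implementer might record the role.

```python
import xml.etree.ElementTree as ET

MODS = "http://www.loc.gov/mods/v3"
ET.register_namespace("mods", MODS)


def q(tag):
    """Qualify a tag name with the MODS namespace."""
    return f"{{{MODS}}}{tag}"


def mods_personal_name(family, given, dates, role_code):
    """Build a MODS name element with separate name parts and a coded role."""
    name = ET.Element(q("name"), type="personal")
    ET.SubElement(name, q("namePart"), type="family").text = family
    ET.SubElement(name, q("namePart"), type="given").text = given
    ET.SubElement(name, q("namePart"), type="date").text = dates
    role = ET.SubElement(name, q("role"))
    term = ET.SubElement(role, q("roleTerm"), type="code", authority="marcrelator")
    term.text = role_code
    return name


speaker = mods_personal_name("Greer", "Germaine", "1939-", "spk")
print(ET.tostring(speaker, encoding="unicode"))
```

Keeping family name, given name and dates in separate parts is what makes matching against an authority file practical, whereas the DC example carries them as a single string.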

3.9.8 Using METS it would be admissible to include more than one set of descriptive metadata suited to different purposes, for example a Dublin Core set (for OAI-PMH (Open Archives Initiative Protocol for Metadata Harvesting) compliance) and a more sophisticated MODS set for compliance with other initiatives, particularly exchange of records with MARC encoded systems. This ability to incorporate other standard approaches is one of the advantages of METS.
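A minimal sketch of this arrangement follows: one METS object carrying two descriptive metadata sections, each wrapped and labelled with its metadata type. It uses Python's standard `xml.etree.ElementTree`; the section identifiers and the title are invented, and the result has not been validated against the METS, DC or MODS schemas.

```python
import xml.etree.ElementTree as ET

METS = "http://www.loc.gov/METS/"
DC = "http://purl.org/dc/elements/1.1/"
MODS = "http://www.loc.gov/mods/v3"
for prefix, uri in (("mets", METS), ("dc", DC), ("mods", MODS)):
    ET.register_namespace(prefix, uri)


def add_descriptive_section(root, section_id, mdtype, payload):
    """Wrap a descriptive metadata payload in its own dmdSec."""
    section = ET.SubElement(root, f"{{{METS}}}dmdSec", ID=section_id)
    wrap = ET.SubElement(section, f"{{{METS}}}mdWrap", MDTYPE=mdtype)
    ET.SubElement(wrap, f"{{{METS}}}xmlData").append(payload)
    return section


mets = ET.Element(f"{{{METS}}}mets")

# Simple Dublin Core description, e.g. for OAI-PMH harvesting.
dc_title = ET.Element(f"{{{DC}}}title")
dc_title.text = "Oral history interview, part 1"

# Richer MODS description, e.g. for exchange with MARC-based systems.
mods_root = ET.Element(f"{{{MODS}}}mods")
title_info = ET.SubElement(mods_root, f"{{{MODS}}}titleInfo")
ET.SubElement(title_info, f"{{{MODS}}}title").text = "Oral history interview, part 1"

add_descriptive_section(mets, "DMD-DC", "DC", dc_title)
add_descriptive_section(mets, "DMD-MODS", "MODS", mods_root)
print(ET.tostring(mets, encoding="unicode"))
```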